How to solve the LangGraph or LangChain init_chat_model authentication error

1. Purpose

This post aims to solve the authentication error that occurs when using init_chat_model in LangGraph or LangChain. Here is the error I got:

```
Traceback (most recent call last):
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/langgraph/basic/stategraph1.py", line 61, in <module>
    stream_graph_updates(user_input)
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/langgraph/basic/stategraph1.py", line 47, in stream_graph_updates
    for event in graph.stream({"messages": [{"role":"user", "content": user_input}]}):
                 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/.venv/lib/python3.12/site-packages/langgraph/pregel/__init__.
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/langgraph/basic/stategraph1.py", line 29, in chatbot
    return {"messages": [llm.invoke(state["messages"])]}
                         ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/.venv/lib/python3.12/site-packages/langchain_core/language_models/chat_models.py", line 371, in invoke
    self.generate_prompt(
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/.venv/lib/python3.12/site-packages/langchain_core/language_models/chat_models.py", line 956, in generate_prompt
    return self.generate(prompt_messages, stop=stop, callbacks=callbacks, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/.venv/lib/python3.12/site-packages/langchain_core/language_models/chat_models.py", line 775, in generate
    self._generate_with_cache(
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/.venv/lib/python3.12/site-packages/langchain_core/language_models/chat_models.py", line 1021, in _generate_with_cache
    result = self._generate(
             ^^^^^^^^^^^^^^^
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/.venv/lib/python3.12/site-packages/langchain_deepseek/chat_models.py", line 296, in _generate
    return super()._generate(
           ^^^^^^^^^^^^^^^^^^
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/.venv/lib/python3.12/site-packages/langchain_openai/chat_models/base.py", line 973, in _generate
    response = self.client.create(**payload)
               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/.venv/lib/python3.12/site-packages/openai/_utils/_utils.py", line 287, in wrapper
    return func(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/.venv/lib/python3.12/site-packages/openai/resources/chat/completions/completions.py", line 925, in create
    return self._post(
           ^^^^^^^^^^^
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/.venv/lib/python3.12/site-packages/openai/_base_client.py", line 1239, in post
    return cast(ResponseT, self.request(cast_to, opts, stream=stream, stream_cls=stream_cls))
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/Users/bswen/projects/PythonProjects/langchain-rag1/.venv/lib/python3.12/site-packages/openai/_base_client.py", line 1034, in request
    raise self._make_status_error_from_response(err.response) from None
openai.AuthenticationError: Error code: 401 - {'error': {'message': 'Authentication Fails, Your api key: **** is invalid', 'type': 'authentication_error', 'param': None, 'code': 'invalid_request_error'}}
During task with name 'chatbot' and id '82a05b24-8d68-b3c6-7a83-c538aff44494'
```

2. The code and solution

2.1 The code

Here is the main code for testing a basic LangGraph graph:

from typing import Annotatedfrom typing_extensions import TypedDictfrom langgraph.graph import StateGraph,STARTfrom langgraph.graph.message import add_messagesfrom langchain.chat_models import init_chat_model

default_api_key= "mykey....";default_base_url='https://api.siliconflow.cn/v1'default_model = "deepseek-ai/DeepSeek-V3"

# the state nodeclass State(TypedDict): messages: Annotated[list,add_messages]

graph_builder = StateGraph(State)

# init the chat modelllm = init_chat_model(model=default_model, api_key=default_api_key, base_url=default_base_url)

def chatbot(state:State): return {"messages": [llm.invoke(state["messages"])]}

graph_builder.add_node("chatbot", chatbot)graph_builder.add_edge(START, "chatbot")

# compile the graphgraph = graph_builder.compile()

def stream_graph_updates(user_input: str): for event in graph.stream({"messages": [{"role":"user", "content": user_input}]}): for value in event.values(): print("Assistant:", value["messages"][-1].content)

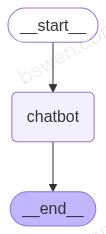

# start the graphwhile True: try: user_input = input("User: ") if user_input.lower() in ["quit", "exit", "q"]: print("Goodbye") break stream_graph_updates(user_input) except: user_input = "What do you know about LangGraph?" print("User: "+user_input) stream_graph_updates(user_input) breakI want to build a graph like this:

You can see that I have used the init_chat_model function to initialize a chat model as follows, without specifying a model_provider:

```python
llm = init_chat_model(model=default_model, api_key=default_api_key, base_url=default_base_url)
```

But when I run the code, I get the following error:

```
openai.AuthenticationError: Error code: 401 - {'error': {'message': 'Authentication Fails, Your api key: **** is invalid', 'type': 'authentication_error', 'param': None, 'code': 'invalid_request_error'}}
During task with name 'chatbot' and id '82a05b24-8d68-b3c6-7a83-c538aff44494'
```

2.2 The solution

Let’s view the documentation for init_chat_model:

Here is the source code for init_chat_model function:

def init_chat_model( model: Optional[str] = None, *, model_provider: Optional[str] = None, configurable_fields: Optional[ Union[Literal["any"], list[str], tuple[str, ...]] ] = None, config_prefix: Optional[str] = None, **kwargs: Any,) -> Union[BaseChatModel, _ConfigurableModel]: """Initialize a ChatModel from the model name and provider.

**Note:** Must have the integration package corresponding to the model provider installed.

Args: model: The name of the model, e.g. "o3-mini", "claude-3-5-sonnet-latest". You can also specify model and model provider in a single argument using '{model_provider}:{model}' format, e.g. "openai:o1". model_provider: The model provider if not specified as part of model arg (see above). Supported model_provider values and the corresponding integration package are:

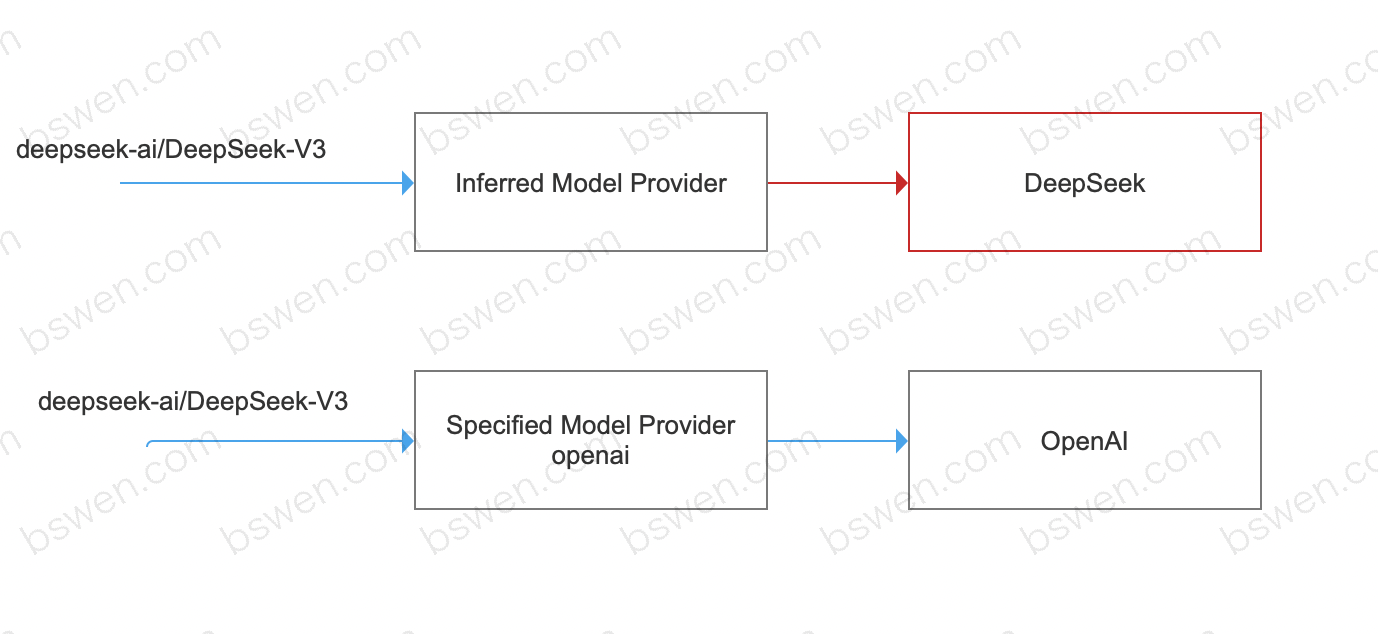

- 'openai' -> langchain-openai - 'anthropic' -> langchain-anthropic - 'azure_openai' -> langchain-openai - 'azure_ai' -> langchain-azure-ai - 'google_vertexai' -> langchain-google-vertexai - 'google_genai' -> langchain-google-genai - 'bedrock' -> langchain-aws - 'bedrock_converse' -> langchain-aws - 'cohere' -> langchain-cohere - 'fireworks' -> langchain-fireworks - 'together' -> langchain-together - 'mistralai' -> langchain-mistralai - 'huggingface' -> langchain-huggingface - 'groq' -> langchain-groq.....I am using a openAI compatible model from siliconflow, which is compatible with openAI. And I notice that the init_chat_model has a parameter named model_provider which will impact the model authentication process ,So I need to set model_provider to initialize a chat model as follows:

```python
llm = init_chat_model(model=default_model, model_provider="openai", api_key=default_api_key, base_url=default_base_url)
```

After setting model_provider to "openai", the code runs successfully.

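The key point is that you should set model_provider to "openai". If you don't, init_chat_model infers the provider from the model name. Because "deepseek-ai/DeepSeek-V3" starts with "deepseek", the inferred provider is deepseek (note langchain_deepseek/chat_models.py in the traceback), which is not the right provider for SiliconFlow's OpenAI-compatible endpoint. With "openai", the model is created through langchain-openai, which sends the request to the base_url you passed, using your API key.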
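To make the inference step concrete, here is a simplified sketch of how a provider could be resolved: an explicit model_provider is used as-is; otherwise a supported '{model_provider}:{model}' prefix is split off; otherwise the provider is guessed from the start of the model name. This is a hypothetical re-implementation, not LangChain's code: the names resolve_provider and infer_provider, and the abridged prefix table, are assumptions for illustration, and the real table inside LangChain differs between versions.

```python
from typing import Optional

# Hypothetical, abridged prefix table (assumption; LangChain's real one is longer).
_PREFIX_TO_PROVIDER = [
    (("gpt-3", "gpt-4", "o1", "o3"), "openai"),
    (("claude",), "anthropic"),
    (("mistral",), "mistralai"),
    (("deepseek",), "deepseek"),
]

# Providers accepted in the "provider:model" shorthand (abridged, assumption).
_SUPPORTED = {"openai", "anthropic", "mistralai", "deepseek"}


def infer_provider(model: str) -> Optional[str]:
    """Guess a provider from the model name's prefix, or return None."""
    for prefixes, provider in _PREFIX_TO_PROVIDER:
        if model.startswith(prefixes):  # str.startswith accepts a tuple
            return provider
    return None


def resolve_provider(model: str, model_provider: Optional[str] = None) -> tuple[str, str]:
    """Return (model_name, provider), mimicking the lookup order described above."""
    if model_provider is not None:
        # 1. An explicit provider always wins.
        return model, model_provider
    if ":" in model:
        # 2. "provider:model" shorthand, e.g. "openai:deepseek-ai/DeepSeek-V3".
        prefix, rest = model.split(":", 1)
        if prefix in _SUPPORTED:
            return rest, prefix
    # 3. Fall back to guessing from the model name.
    provider = infer_provider(model)
    if provider is None:
        raise ValueError(f"Cannot infer a provider for {model!r}; pass model_provider")
    return model, provider


if __name__ == "__main__":
    model = "deepseek-ai/DeepSeek-V3"
    print(resolve_provider(model))  # -> ('deepseek-ai/DeepSeek-V3', 'deepseek')
    print(resolve_provider(model, model_provider="openai"))  # -> ('deepseek-ai/DeepSeek-V3', 'openai')
    print(resolve_provider("openai:" + model))  # -> ('deepseek-ai/DeepSeek-V3', 'openai')
```

The first call reproduces the mistake in this post: a SiliconFlow model whose name happens to start with "deepseek" gets routed to the deepseek integration. Following the docstring quoted above, passing model="openai:deepseek-ai/DeepSeek-V3" should work as an alternative to model_provider="openai".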

3. The theory

What’s the difference between langchain and langgraph?

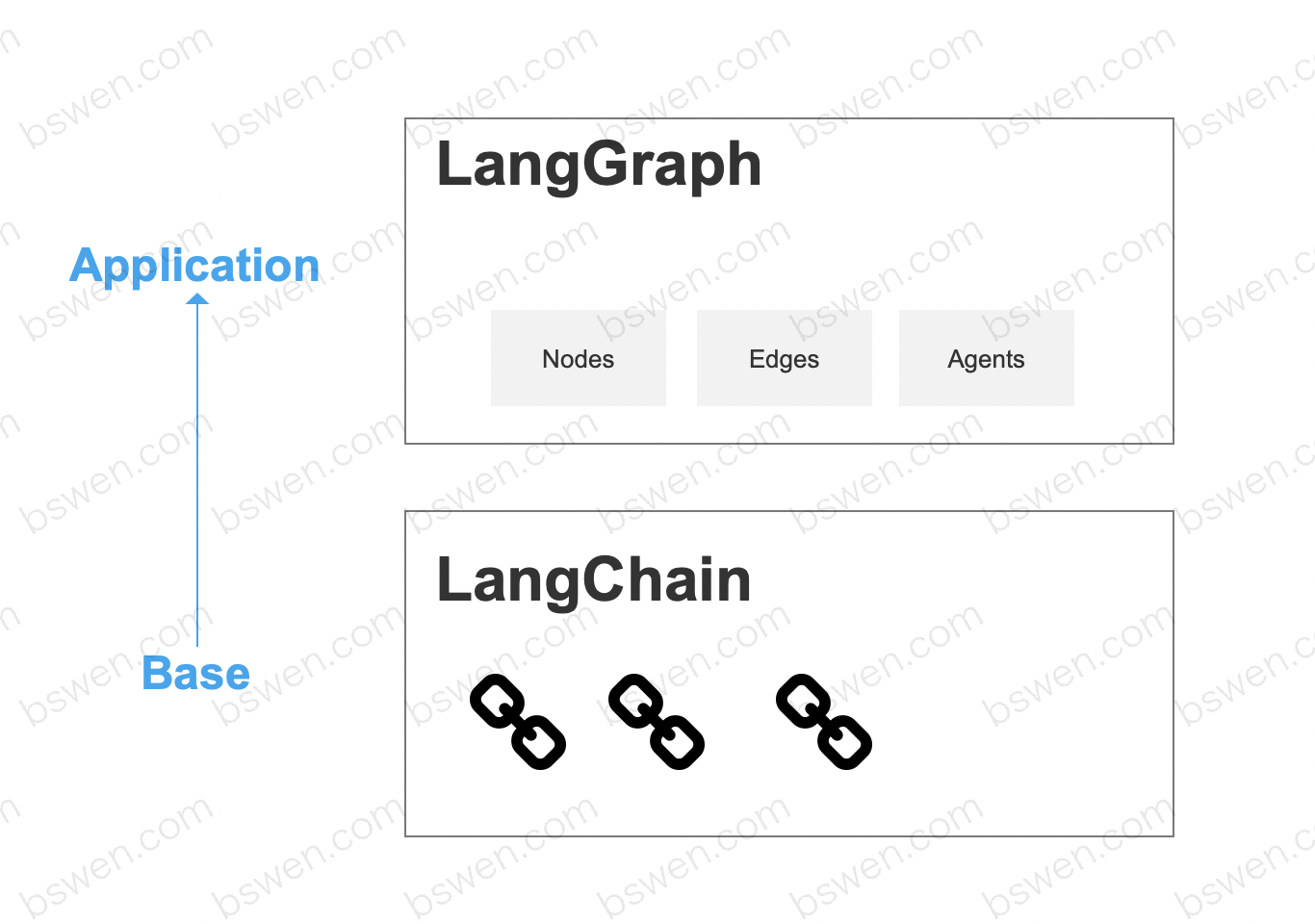

LangGraph is a graph-based language model, which is a new way to use language models. It is based on the idea that language models can be used to build graphs, and then the graphs can be used to generate text.

But langchain is a library for building LLM applications. It is a high-level library that provides a set of tools for building LLM applications. It is not a graph-based language model.

Here is a diagram shows the difference:
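The node-and-state idea can be illustrated without LangGraph at all. The sketch below is a toy, hypothetical runner, not LangGraph's implementation: each node returns a partial state update, and an append-only reducer merges it into the "messages" key, roughly the role add_messages plays in the chatbot code (the real add_messages also replaces messages that share an ID). The names run_linear_graph, append_reducer and fake_chatbot are made up for this example, and fake_chatbot stands in for the llm.invoke call.

```python
from typing import Callable, Dict, List

State = Dict[str, List[str]]
Node = Callable[[State], State]


def append_reducer(old: List[str], new: List[str]) -> List[str]:
    """Merge a node's update into the state by appending, like a message log."""
    return old + new


def run_linear_graph(nodes: List[Node], state: State) -> State:
    """Run nodes in order (START -> node1 -> node2 ...), merging each update."""
    for node in nodes:
        update = node(state)
        for key, value in update.items():
            # Build a new dict so the caller's state is not mutated.
            state = {**state, key: append_reducer(state.get(key, []), value)}
    return state


def fake_chatbot(state: State) -> State:
    # Stand-in for llm.invoke(...): echo the last message.
    return {"messages": ["echo: " + state["messages"][-1]]}


if __name__ == "__main__":
    final = run_linear_graph([fake_chatbot], {"messages": ["hello"]})
    print(final["messages"])  # -> ['hello', 'echo: hello']
```

In LangGraph itself, the reducer is attached through the Annotated type on the state schema, and edges rather than a Python list decide the execution order.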

4. Summary

In this post, I have explained how to build a simple graph with LangGraph and how to solve the authentication problem when using init_chat_model to initialize a chat model. If you encounter authentication problems with an OpenAI-compatible provider, pay attention to the model_provider parameter of init_chat_model: set it explicitly instead of letting it be inferred from the model name.

Final Words + More Resources

My intention with this article was to help others by sharing my knowledge and experience. If you want to contact me, you can reach me by email.

Here are the most important links from this article, along with some further resources that will help you on this topic:

- 👨‍💻 init_chat_model api

- 👨‍💻 langgraph

Oh, and if you found these resources useful, don’t forget to support me by starring the repo on GitHub!